# Logistic Regression

Despite its name, logistic regression is commonly used for classification (binary or multiclass).
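Logistic regression computes a linear score z = w·x + b and passes it through the sigmoid function σ(z) = 1 / (1 + e^(−z)), which maps the score to a probability between 0 and 1. Predicting class 1 when that probability is above 0.5 turns it into a classifier. Here is a minimal NumPy sketch. The weights `w` and bias `b` are made-up illustration values, not fitted ones:

```python
import numpy as np


def sigmoid(z):
    """Map any real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


# Hypothetical weights and bias for two features (illustration only, not fitted)
w = np.array([1.5, -2.0])
b = 0.25

X = np.array([
    [1.0, 0.0],
    [0.0, 1.0],
    [2.0, 1.0],
])

z = X @ w + b                      # linear score for each row
proba = sigmoid(z)                 # probability of class 1
pred = (proba > 0.5).astype(int)   # threshold at 0.5 to get a class label

print(proba.round(3))
print(pred)
```

scikit-learn's `LogisticRegression` learns `w` and `b` from the data. They appear as `coef_` and `intercept_` later in this post.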

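```python
from sklearn import preprocessing, linear_model
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
# sklearn.cross_validation was removed in scikit-learn 0.20; train_test_split now lives in model_selection
from sklearn.model_selection import train_test_split

plt.style.use('ggplot')
plt.rcParams['font.family'] = 'SimHei'  # SimHei, a font that can render Chinese labels

df2 = pd.read_csv("kaggle_titanic_train.csv", encoding="big5")  # Kaggle Titanic training set
```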

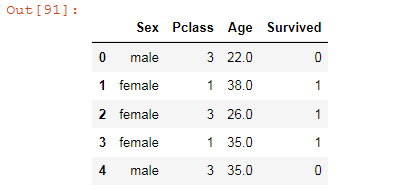

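```python
# Use 'Sex', 'Pclass' and 'Age' to predict 'Survived'
# Age is missing for some passengers and LogisticRegression cannot handle NaN,
# so drop those rows; .copy() avoids SettingWithCopyWarning when Sex is re-encoded below
df3 = df2[['Sex', 'Pclass', 'Age', 'Survived']].dropna().copy()
df3.head()
```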

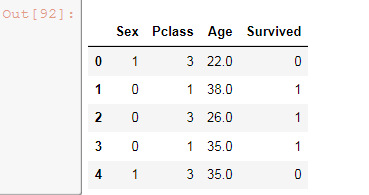
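In the Kaggle Titanic training set, `Age` is missing for some passengers. scikit-learn's `LogisticRegression` raises an error on NaN inputs, so those rows have to be dropped (or imputed) before training. The small sketch below uses a hypothetical toy DataFrame with the same columns, not the real data, and shows how to count and drop the missing values:

```python
import numpy as np
import pandas as pd

# Hypothetical toy rows with the same columns as df3 (not the real Titanic data)
toy = pd.DataFrame({
    "Sex": ["male", "female", "female", "male"],
    "Pclass": [3, 1, 3, 1],
    "Age": [22.0, 38.0, np.nan, 35.0],
    "Survived": [0, 1, 1, 0],
})

print(toy.isna().sum())  # number of missing values per column

# Keep only complete rows; .copy() returns an independent DataFrame,
# so later column assignments do not trigger SettingWithCopyWarning
toy_clean = toy.dropna().copy()
print(len(toy), "->", len(toy_clean))
```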

```python
# Create a 0/1 dummy variable for Sex
# (LabelEncoder sorts the class names, so female -> 0, male -> 1)
label_encoder = preprocessing.LabelEncoder()
encoded_Sex = label_encoder.fit_transform(df3["Sex"])
df3["Sex"] = encoded_Sex
df3.head()
```
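For a two-valued column like `Sex`, `LabelEncoder` produces the same 0/1 column as a single dummy variable. The sketch below compares it with `pd.get_dummies(..., drop_first=True)` on hypothetical values. For columns with three or more categories, dummy (one-hot) encoding is usually the safer choice, because integer label codes impose an artificial order on the categories.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical values for the Sex column
sex = pd.Series(["male", "female", "female", "male"])

# LabelEncoder assigns integers in sorted order of the class names
le = LabelEncoder()
codes = le.fit_transform(sex)
print(le.classes_)  # class order: female, male
print(codes)

# get_dummies with drop_first=True keeps one 0/1 column named "male"
dummies = pd.get_dummies(sex, drop_first=True).astype(int)
print(dummies)
```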

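```python
# Split into training and test sets
x = df3[['Sex', 'Pclass', 'Age']]
y = df3['Survived']  # a 1-D Series avoids sklearn's DataConversionWarning when fitting
x_train, x_test, y_train, y_test = train_test_split(
    x, y, test_size=0.3, random_state=20170816)  # random_state: seed for a reproducible split
x_train
```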
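With `test_size=0.3`, `train_test_split` puts ⌈0.3 × n⌉ rows in the test set. Assume the 714 rows of the Kaggle training set that have a recorded `Age`. The test set then has 215 rows, which matches the 106 + 64 + 24 + 21 = 215 cases in the accuracy calculation below. The following sketch uses placeholder arrays of that length in place of the real features:

```python
import numpy as np
from sklearn.model_selection import train_test_split

n_samples = 714  # assumed number of rows after dropping missing Age
X = np.arange(n_samples * 3).reshape(n_samples, 3)  # placeholder features
y = np.arange(n_samples) % 2                         # placeholder labels

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=20170816
)

# The test size is rounded up: ceil(0.3 * 714) = ceil(214.2) = 215
print(len(X_train), len(X_test))
```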

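```python
# Standardization: put all features on the same scale so that no single feature
# (e.g. Age, with its much larger range) dominates training
from sklearn.preprocessing import StandardScaler

sc = StandardScaler()
sc.fit(x_train)                     # learn mean and std from the training set only
x_train_nor = sc.transform(x_train)
x_test_nor = sc.transform(x_test)   # reuse the training mean and std for the test set
```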
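Standardization rescales each feature to zero mean and unit variance, z = (x − μ) / σ. For logistic regression this matters because the default L2 penalty treats all coefficients alike, and features on similar scales also help the solver converge. The scaler is fitted on the training set only, and the same μ and σ are reused for the test set. The check below uses hypothetical numbers to confirm that `StandardScaler` matches the formula with the population standard deviation:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical training and test features (columns like Sex, Pclass, Age)
X_train = np.array([[0, 3, 22.0], [1, 1, 38.0], [1, 3, 26.0], [0, 1, 35.0]])
X_test = np.array([[0, 2, 54.0]])

sc = StandardScaler().fit(X_train)

# Same transformation by hand: training mean and population std (ddof=0)
mean = X_train.mean(axis=0)
std = X_train.std(axis=0)
manual_test = (X_test - mean) / std

print(np.allclose(sc.transform(X_test), manual_test))
```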

```python
# Train the classifier on the 3 standardized features
from sklearn.linear_model import LogisticRegression

lr = LogisticRegression()
lr.fit(x_train_nor, y_train)

# Print the coefficients
print(lr.coef_)

# Print the intercept
print(lr.intercept_)
```

Output:

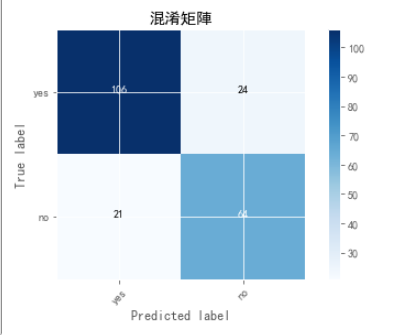

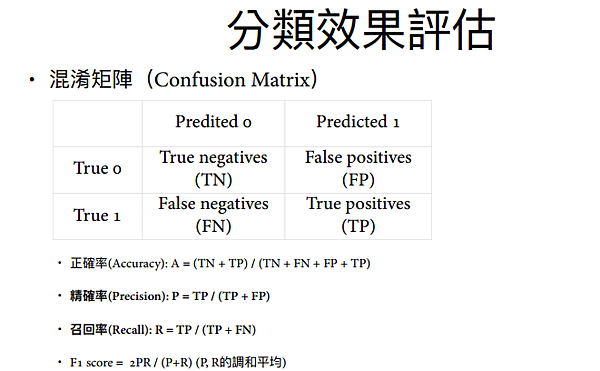
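`coef_` holds one weight per standardized feature and `intercept_` holds the bias. The predicted survival probability is the sigmoid of their linear combination, so a positive coefficient raises the probability as that feature increases. The sketch below runs on synthetic data from `make_classification`, not on the Titanic data. It checks that rebuilding the score and probability from `coef_` and `intercept_` matches `decision_function` and `predict_proba`:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic 3-feature binary data standing in for the standardized Titanic features
X, y = make_classification(n_samples=200, n_features=3, n_informative=3,
                           n_redundant=0, random_state=0)

lr = LogisticRegression().fit(X, y)

# Linear score rebuilt from the fitted coefficients and intercept
z = X @ lr.coef_.ravel() + lr.intercept_[0]
manual_proba = 1.0 / (1.0 + np.exp(-z))  # sigmoid of the score

print(np.allclose(z, lr.decision_function(X)))
print(np.allclose(manual_proba, lr.predict_proba(X)[:, 1]))
```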

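The confusion-matrix evaluation is shown in the figure.

Therefore, the accuracy of this logistic regression model is:

Accuracy = (106 + 64) / (106 + 64 + 24 + 21) = 170 / 215 ≈ 0.79 (79% accuracy)

Here 106 and 64 are the correctly classified test cases (the diagonal of the confusion matrix), and 24 and 21 are the misclassified ones.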
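The confusion matrix and accuracy can be computed with `sklearn.metrics`. Accuracy is the diagonal (correct predictions) divided by the total. The sketch below uses hypothetical labels. With this post's variables, the inputs would presumably be `y_test` and `lr.predict(x_test_nor)`, although that step is not shown in the original code.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical true and predicted labels (0 = died, 1 = survived)
y_true = np.array([0, 0, 0, 1, 1, 0, 1, 0, 1, 1])
y_pred = np.array([0, 0, 1, 1, 0, 0, 1, 0, 1, 1])

# Rows are true labels, columns are predicted labels
cm = confusion_matrix(y_true, y_pred)
print(cm)

# Accuracy = correct predictions (diagonal) / all predictions
acc_from_cm = np.trace(cm) / cm.sum()
print(acc_from_cm, accuracy_score(y_true, y_pred))

# The same formula applied to the counts reported in this post
print(round((106 + 64) / (106 + 64 + 24 + 21), 3))
```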

PS: To visualize the confusion matrix, first run the following code (provided in the official scikit-learn documentation):

```python
# Plot a confusion matrix (from the official scikit-learn documentation example)
import itertools

import matplotlib.pyplot as plt
import numpy as np


def plot_confusion_matrix(cm, classes,
                          normalize=False,
                          title='Confusion matrix',
                          cmap=plt.cm.Blues):
    """
    This function prints and plots the confusion matrix.
    Normalization can be applied by setting `normalize=True`.
    """
    if normalize:
        # Divide each row by its sum so that every true class sums to 1
        cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
        print("Normalized confusion matrix")
    else:
        print('Confusion matrix, without normalization')

    print(cm)

    plt.imshow(cm, interpolation='nearest', cmap=cmap)
    plt.title(title)
    plt.colorbar()
    tick_marks = np.arange(len(classes))
    plt.xticks(tick_marks, classes, rotation=45)
    plt.yticks(tick_marks, classes)

    # Write each value in its cell, using white text on dark cells
    fmt = '.2f' if normalize else 'd'
    thresh = cm.max() / 2.
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        plt.text(j, i, format(cm[i, j], fmt),
                 horizontalalignment="center",
                 color="white" if cm[i, j] > thresh else "black")

    plt.tight_layout()
    plt.ylabel('True label')
    plt.xlabel('Predicted label')
```
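scikit-learn 0.22 and later also provide `ConfusionMatrixDisplay`, which draws a similar plot without copying a helper function. In the same releases, `confusion_matrix(..., normalize='true')` returns the row-normalized matrix that `normalize=True` produces in the helper. The sketch below uses hypothetical labels and the non-interactive Agg backend so that it also runs without a display. In a notebook, drop the `matplotlib.use` line and call `plt.show()` instead.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; remove in a notebook
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import ConfusionMatrixDisplay, confusion_matrix

# Hypothetical true and predicted labels (0 = died, 1 = survived)
y_true = np.array([0, 0, 0, 1, 1, 0, 1, 0, 1, 1])
y_pred = np.array([0, 0, 1, 1, 0, 0, 1, 0, 1, 1])

cm = confusion_matrix(y_true, y_pred)
cm_norm = confusion_matrix(y_true, y_pred, normalize="true")  # each row sums to 1

disp = ConfusionMatrixDisplay(confusion_matrix=cm,
                              display_labels=["Died", "Survived"])
disp.plot(cmap=plt.cm.Blues)  # draws the matrix with counts in each cell
plt.title("Confusion matrix")
```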
